The CEO of ChatGPT-maker OpenAI said that the dangers that keep him awake at night regarding artificial intelligence are the “very subtle societal misalignments” that could make the systems wreak havoc.

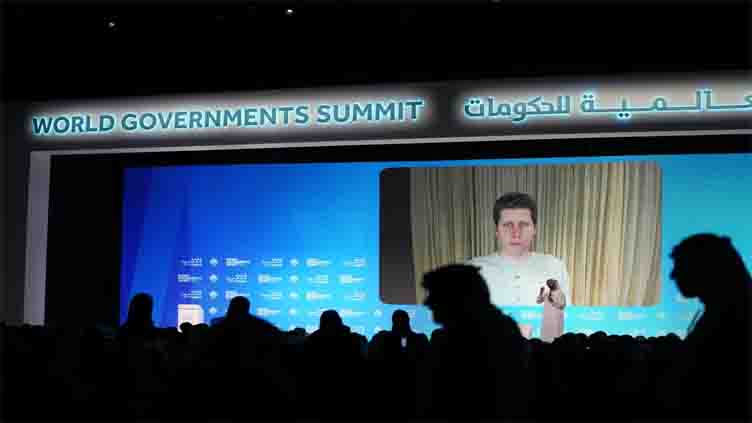

Sam Altman, speaking at the World Governments Summit in Dubai via a video call, reiterated his call for a body like the International Atomic Energy Agency to be created to oversee AI that’s likely advancing faster than the world expects.

“There’s some things in there that are easy to imagine where things really go wrong. And I’m not that interested in the killer robots walking on the street direction of things going wrong,” Altman said.

“I’m much more interested in the very subtle societal misalignments where we just have these systems out in society and through no particular ill intention, things just go horribly wrong.”

However, Altman stressed that the AI industry, including companies like OpenAI, shouldn’t be in the driver’s seat when it comes to making regulations governing the industry.

“We’re still in the stage of a lot of discussion. So there’s you know, everybody in the world is having a conference. Everyone’s got an idea, a policy paper, and that’s OK,” Altman said.

“I think we’re still at a time where debate is needed and healthy, but at some point in the next few years, I think we have to move towards an action plan with real buy-in around the world.”

OpenAI, a San Francisco-based artificial intelligence startup, is one of the leaders in the field. Microsoft has invested billions of dollars in OpenAI.

The Associated Press has signed a deal with OpenAI that gives the company access to the AP’s news archive. Meanwhile, The New York Times has sued OpenAI and Microsoft over the use of its stories without permission to train OpenAI’s chatbots.

OpenAI’s success has made Altman the public face for generative AI’s rapid commercialization — and the fears over what may come from the new technology.

The UAE, an autocratic federation of seven hereditarily ruled sheikhdoms, has signs of that risk. Speech remains tightly controlled. Those restrictions affect the flow of accurate information, the same details AI programs like ChatGPT rely on as machine-learning systems to provide their answers for users.

The Emirates also has the Abu Dhabi firm G42, overseen by the country’s powerful national security adviser. G42 has what experts suggest is the world’s leading Arabic-language artificial intelligence model.

The company has faced spying allegations for its ties to a mobile phone app identified as spyware. It has also faced claims it could have gathered genetic material secretly from Americans for the Chinese government.

G42 has said it would cut ties to Chinese suppliers over American concerns. However, the discussion with Altman, moderated by the UAE’s Minister of State for Artificial Intelligence Omar al-Olama, touched on none of the local concerns.

For his part, Altman said he was heartened to see that schools, where teachers feared students would use AI to write papers, now embrace the technology as crucial for the future. But he added that AI remains in its infancy.

“I think the reason is the current technology that we have is like … that very first cellphone with a black-and-white screen,” Altman said.

“So give us some time. But I will say I think in a few more years it’ll be much better than it is now. And in a decade it should be pretty remarkable.”
